NVIDIA DGX Quantum

The first hybrid quantum-classical control system with low-latency, high-throughput CPU-GPU-QPU integration and full NVQLink compatibility

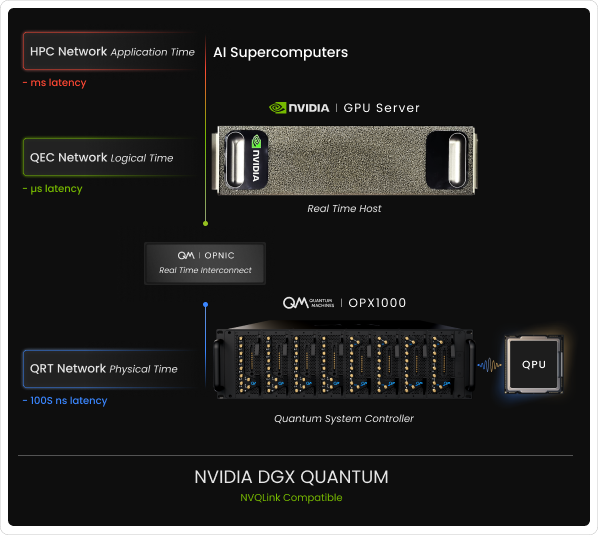

Meet NVIDIA DGX Quantum, the first system to connect a quantum controller directly to the NVIDIA accelerated computing stack. It unifies CPU, GPU, and QPU (quantum processing unit) with deterministic low latency for hybrid quantum-classical computing.

By integrating Quantum Machines’ high-throughput quantum system controller with NVIDIA real-time host acceleration, DGX Quantum brings powerful classical resources into the control loop within microseconds, enabling quantum error correction, rapid calibrations, and adaptive experiments. This foundation supports logical QPUs, paving the way for fault-tolerant quantum computing and quantum-accelerated supercomputing.

NVIDIA DGX Quantum is the first architecture designed to tightly couple GPU-CPU superchips to QPUs with bounded-latency integration, so that classical acceleration becomes part of the quantum runtime. At its core is Quantum Machines’ OPX1000 Quantum System Controller, powered by QM’s proprietary Pulse Processing Unit. QM’s OP-NIC serves as the real-time interconnect, coupling the controller to an NVIDIA real-time host (a CPU-GPU server). Together with full programmability via CUDA-Q, DGX Quantum exposes a single programming model for GPU-CPU-QPU integration and demonstrates all the building blocks of NVIDIA NVQLink, the new open platform for real-time quantum-classical orchestration.

NVIDIA DGX Quantum enables resource-heavy computation within quantum sequences, such as compilation and orchestration of quantum workflows, reinforcement learning for real-time calibration of qubit parameters, and quantum error correction decoding at scale.

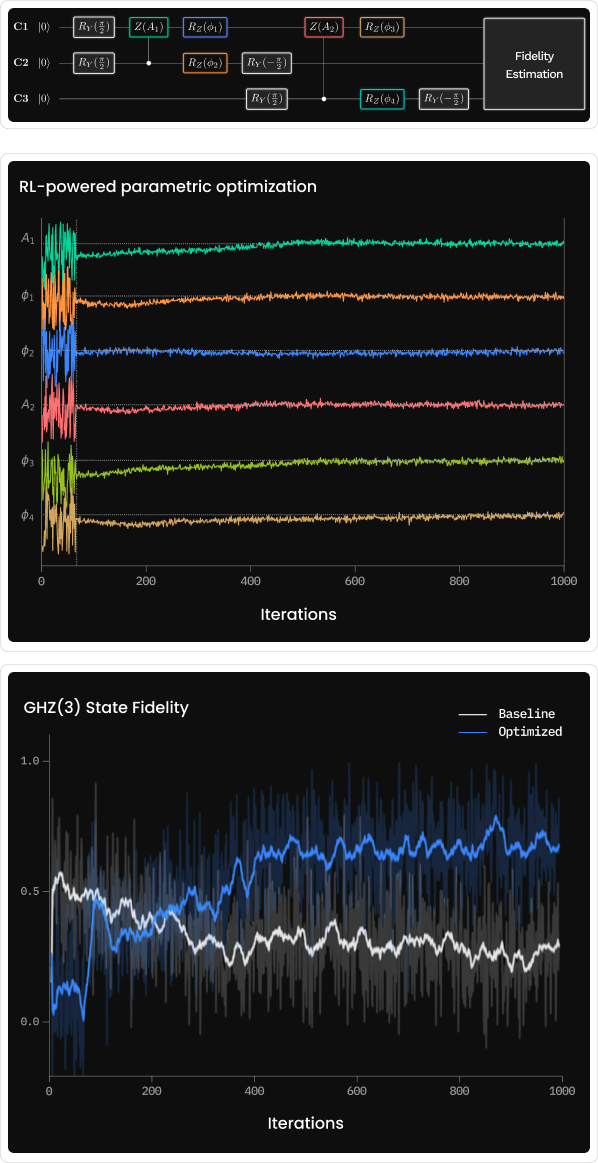

Integrating quantum systems with classical resources is especially valuable for online calibrations, where powerful classical accelerators make it possible to use more computationally demanding methods. With NVIDIA DGX Quantum, calibration routines run as a closed loop inside the experiment: the system coordinates shots, streams readout to the classical accelerator, evaluates optimizers or reinforcement-learning (RL) policies, and then updates control parameters before the next shot. On a 3-qubit GHZ routine, online RL optimizes 6 pulse parameters, consistently exceeding the best pre-calibrated baseline and correcting drifts that static routines miss.
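The closed calibration loop described above can be illustrated with a small, self-contained Python sketch. It is a toy model under stated assumptions, not the DGX Quantum implementation. The QPU is replaced by a hypothetical `simulated_fidelity` function whose optimum drifts slowly over time. The RL policy from the text is swapped for SPSA (simultaneous perturbation stochastic approximation), a simpler gradient-free optimizer. All names and constants are illustrative. Each iteration mirrors the loop described above: run shot batches, stream the readout, evaluate the optimizer, and update the six pulse parameters before the next batch.

```python
import math
import random

N_PARAMS = 6  # six pulse parameters, as in the GHZ example in the text


def true_fidelity(params, optimum):
    """Hypothetical noiseless fidelity model: decays with distance from the optimum."""
    dist2 = sum((p - o) ** 2 for p, o in zip(params, optimum))
    return math.exp(-dist2)


def simulated_fidelity(params, optimum, shots, rng):
    """Hypothetical stand-in for a batch of QPU shots: binomial fidelity estimate."""
    p_true = true_fidelity(params, optimum)
    successes = sum(1 for _ in range(shots) if rng.random() < p_true)
    return successes / shots


def closed_loop_calibration(steps=300, shots=200, drift=0.001, seed=0):
    """SPSA loop (a stand-in for the RL policy) that re-tunes all parameters each step."""
    rng = random.Random(seed)
    optimum = [0.5] * N_PARAMS   # unknown "true" best settings, drifting over time
    params = [0.0] * N_PARAMS    # initial, pre-calibrated settings
    a, c = 0.05, 0.1             # SPSA step size and perturbation size
    for _ in range(steps):
        # Slow hardware drift that a one-off calibration would not track.
        optimum = [o + drift for o in optimum]
        # Perturb all parameters at once along a random +/-1 direction.
        delta = [rng.choice((-1.0, 1.0)) for _ in range(N_PARAMS)]
        plus = [p + c * d for p, d in zip(params, delta)]
        minus = [p - c * d for p, d in zip(params, delta)]
        # Two shot batches give two noisy fidelity estimates ("streamed readout").
        f_plus = simulated_fidelity(plus, optimum, shots, rng)
        f_minus = simulated_fidelity(minus, optimum, shots, rng)
        # SPSA gradient estimate; step uphill because we maximize fidelity.
        g_scale = (f_plus - f_minus) / (2 * c)
        params = [p + a * g_scale / d for p, d in zip(params, delta)]
    return params, optimum


if __name__ == "__main__":
    params, optimum = closed_loop_calibration()
    print("tracked:", true_fidelity(params, optimum))
    print("static :", true_fidelity([0.0] * N_PARAMS, optimum))
```

In this toy model the static settings lose fidelity as the optimum drifts, while the closed loop keeps tracking it. This is the qualitative behavior the text describes; the numbers themselves carry no information about real hardware.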

The payoff is practical: fewer manual retunes, faster bring-up, higher and steadier fidelities across long runs, and a template that scales to large QPUs and more complex sequences. This demonstrates how tight GPU-CPU-QPU integration transforms calibration from a one-off, time-consuming step into continuous real-time optimization: clear evidence of the dawn of quantum-accelerated AI supercomputing.

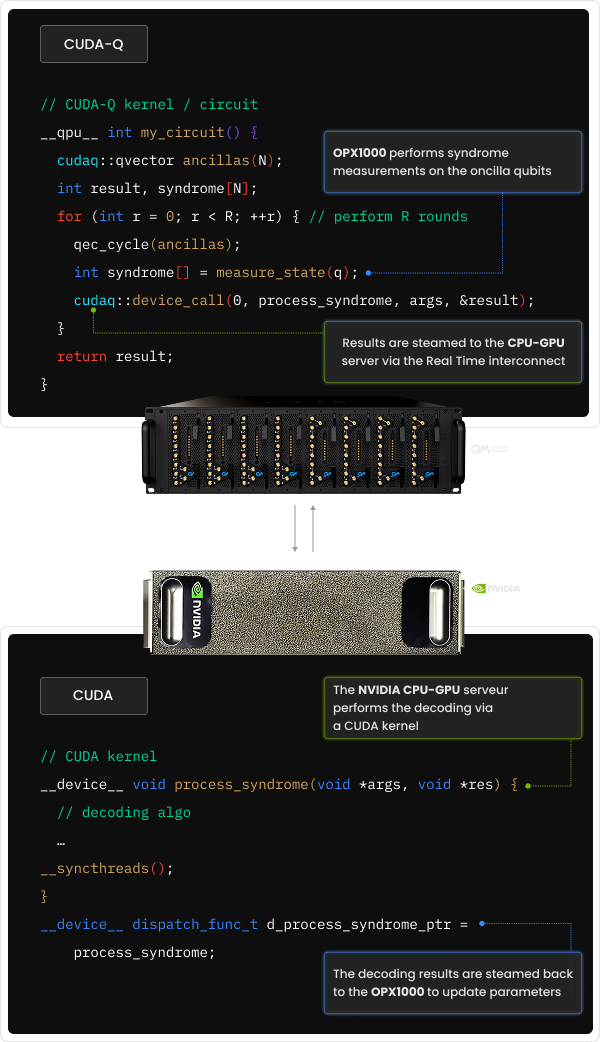

Quantum error correction (QEC) only works if decoding keeps pace with the rate at which error syndromes are produced, and as QPUs scale up, the required classical computation becomes increasingly resource-intensive. NVIDIA DGX Quantum allows QEC decoding to run on high-performance classical accelerators, so the heavy lifting happens on a powerful CPU-GPU server. The quantum system controller runs stabilizer rounds on the QPU and streams error syndromes (measurement results) to the server. A separate kernel (e.g., a CUDA kernel) decodes the syndromes and computes corrections, then sends this information back to the controller, where feed-forward operations close the loop. The communication roundtrip latency is kept below 4 µs, so the full decoding loop can stay within the 10-20 µs limit for useful decoding [Kurman et al., arXiv:2412.00289 (2024)]. By integrating acceleration servers into quantum sequences, NVIDIA DGX Quantum delivers real-time QEC that scales: larger codes, deeper rounds, and higher-throughput experiments without rewriting the stack.
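The controller-to-server decoding loop can be made concrete with a toy model. The Python sketch below is an illustration under simplifying assumptions, not the DGX Quantum software stack:

- It uses a bit-flip repetition code with perfect syndrome measurements. Real experiments also face measurement errors and typically use stronger codes and decoders.
- A minimum-weight decoder stands in for the CUDA decoding kernel.
- A simple latency check assumes the 4 µs roundtrip and the decoder's compute time add up against the 10 µs lower end of the cited window.

All function names are hypothetical.

```python
import random

ROUNDTRIP_US = 4.0   # controller <-> server roundtrip bound quoted in the text
BUDGET_US = 10.0     # strict end of the 10-20 us decoding window cited in the text


def measure_syndrome(data):
    """Controller side: Z_i Z_{i+1} parity checks of a bit-flip repetition code."""
    return [data[i] ^ data[i + 1] for i in range(len(data) - 1)]


def decode(syndrome):
    """Server side (stand-in for a CUDA kernel): minimum-weight correction."""
    candidate = [0]
    for s in syndrome:
        # Each parity check fixes the next bit relative to the previous one.
        candidate.append(candidate[-1] ^ s)
    complement = [1 - b for b in candidate]
    # Both patterns reproduce the syndrome; the lighter one is more likely.
    return candidate if sum(candidate) <= sum(complement) else complement


def fits_budget(decode_time_us, roundtrip_us=ROUNDTRIP_US, budget_us=BUDGET_US):
    """Assumed model: roundtrip and decoder compute time share one budget."""
    return roundtrip_us + decode_time_us <= budget_us


def memory_experiment(distance=5, p=0.02, rounds=1000, seed=1):
    """Repeat noise -> syndrome -> decode -> feed-forward; count logical flips."""
    rng = random.Random(seed)
    data = [0] * distance  # logical |0>: all data qubits 0
    logical_errors = 0
    for _ in range(rounds):
        # Independent bit flips on each data qubit during the round.
        data = [b ^ (rng.random() < p) for b in data]
        syndrome = measure_syndrome(data)                  # streamed to the server
        correction = decode(syndrome)                      # decoded on the server
        data = [b ^ c for b, c in zip(data, correction)]   # feed-forward on the controller
        if data[0] == 1:                                   # decoder picked the wrong codeword
            logical_errors += 1
            data = [0] * distance                          # reset so each failure counts once
    return logical_errors


if __name__ == "__main__":
    print("logical errors:", memory_experiment())
    print("6 us decoder fits budget:", fits_budget(6.0))
```

Under this additive-latency assumption, a 4 µs roundtrip leaves about 6 µs of decoder compute time at the strict 10 µs end of the window and about 16 µs at the 20 µs end.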

Protocols can be written in CUDA-Q, a single, unified hybrid programming model in which one program defines the quantum circuit, data streaming, device calls, and the quantum feedback that applies corrections. This keeps real-time quantum orchestration, decoding, and control in one place, aligned with NVIDIA NVQLink’s open model for bounded-latency quantum-classical integration. As codes grow and decoders evolve, classically accelerated QEC decoding offers the scale and flexibility needed for fault-tolerant quantum computing.

The first NVQLink-compatible system for accelerated quantum supercomputing

GPU/CPU to Quantum System Controller integration with roundtrip latency of < 4 µs

Unified programming experience with CUDA-Q, with access to pulse-level programming via QUA and CUDA integration via device calls

5U rack-mountable system with hot-swappable components, ready for HPC.

Run the Most Advanced Hybrid Algorithms

The only solution designed to support QEC & ultra-fast calibration at scale

Effortless Scalability

Add OPX1000 controllers as your QPU grows, and add GH200 servers to meet increasing computational demands

HPC & Data Center Ready

HPC-QC integration from the hardware quantum control layer and up, designed for data center reliability with redundancies and hot-swappable critical parts

Compatible with All Qubit Technologies

OPX1000 controller supports superconducting qubits, spin qubits, neutral atoms, trapped ions, defect centers, photonic qubits and more...

CUDA & CUDA-Q Integrated

DGX Quantum integrates CUDA-Q, NVIDIA’s open-source programming model for co-programming CPUs, GPUs & QPUs
